How To Set NODE_ENV

Production best practices: performance and reliability

Overview

This article discusses performance and reliability best practices for Express applications deployed to production.

This topic clearly falls into the "devops" world, spanning both traditional development and operations. Accordingly, the information is divided into two parts:

- Things to do in your code (the dev part):

  - Use gzip compression

  - Don't use synchronous functions

  - Do logging correctly

  - Handle exceptions properly

- Things to do in your environment / setup (the ops part):

  - Set NODE_ENV to "production"

  - Ensure your app automatically restarts

  - Run your app in a cluster

  - Cache request results

  - Use a load balancer

  - Use a reverse proxy

Things to do in your code

Here are some things you can do in your code to improve your application's performance:

- Use gzip compression

- Don't use synchronous functions

- Do logging correctly

- Handle exceptions properly

Use gzip compression

Gzip compression can greatly decrease the size of the response body and hence increase the speed of a web app. Use the compression middleware for gzip compression in your Express app. For example:

```javascript
const compression = require('compression')
const express = require('express')
const app = express()
app.use(compression())
```

For a high-traffic website in production, the best way to put compression in place is to implement it at a reverse proxy level (see Use a reverse proxy). In that case, you do not need to use compression middleware. For details on enabling gzip compression in Nginx, see Module ngx_http_gzip_module in the Nginx documentation.
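To get a feel for why compression pays off, the sketch below uses Node's built-in zlib module (not the compression middleware itself) to gzip a made-up, repetitive JSON payload and compare sizes. The payload is an assumption for illustration; real savings depend on what your app actually sends.

```javascript
const zlib = require('node:zlib');
const { promisify } = require('node:util');

// Promise-returning versions of the callback-based zlib functions.
const gzip = promisify(zlib.gzip);
const gunzip = promisify(zlib.gunzip);

// Compress a response body and report how much smaller it gets.
async function compareGzipSize(body) {
  const compressed = await gzip(body);
  const restored = (await gunzip(compressed)).toString('utf8');
  return {
    rawBytes: Buffer.byteLength(body),
    gzipBytes: compressed.length,
    roundTripOk: restored === body,
  };
}

// Made-up payload resembling a typical API list response.
const sampleBody = JSON.stringify(
  Array.from({ length: 200 }, (_, i) => ({ id: i, status: 'active', region: 'eu-west' })),
);

compareGzipSize(sampleBody).then((result) => console.log(result));
```

The async zlib calls are used deliberately, in keeping with the advice in the next section.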

Don't use synchronous functions

Synchronous functions and methods tie up the executing process until they return. A single call to a synchronous function might return in a few microseconds or milliseconds; however, in high-traffic websites, these calls add up and reduce the performance of the app. Avoid their use in production.

Although Node and many modules provide synchronous and asynchronous versions of their functions, always use the asynchronous version in production. The only time when a synchronous function can be justified is upon initial startup.

If you are using Node.js 4.0+ or io.js 2.1.0+, you can use the --trace-sync-io command-line flag to print a warning and a stack trace whenever your application uses a synchronous API. Of course, you wouldn't want to use this in production, but rather to ensure that your code is ready for production. See the node command-line options documentation for more information.
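The split between "synchronous at startup, asynchronous everywhere else" can be sketched with Node's fs module. The config file name and contents are hypothetical; the file is written here only so the example is self-contained. Note that --trace-sync-io only reports synchronous I/O after the first turn of the event loop, so a read like the startup one below would not be flagged.

```javascript
const fs = require('node:fs');
const os = require('node:os');
const path = require('node:path');

// Hypothetical config file, created here only so the sketch runs on its own.
const configPath = path.join(os.tmpdir(), 'example-app-config.json');
fs.writeFileSync(configPath, JSON.stringify({ greeting: 'hello' }));

// Startup: nothing is being served yet, so a synchronous read is acceptable.
const startupConfig = JSON.parse(fs.readFileSync(configPath, 'utf8'));

// Request path: use the promise-based API so the event loop is never blocked.
async function readConfigPerRequest() {
  const text = await fs.promises.readFile(configPath, 'utf8');
  return JSON.parse(text);
}
```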

Do logging correctly

In general, there are two reasons for logging from your app: for debugging and for logging app activity (essentially, everything else). Using console.log() or console.error() to print log messages to the terminal is common practice in development. But these functions are synchronous when the destination is a terminal or a file, so they are not suitable for production, unless you pipe the output to another program.

For debugging

If you're logging for purposes of debugging, then instead of using console.log(), use a special debugging module like debug. This module enables you to use the DEBUG environment variable to control what debug messages are sent to console.error(), if any. To keep your app purely asynchronous, you'd still want to pipe console.error() to another program. But then, you're not really going to debug in production, are you?
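Node also ships a built-in helper on the same principle, util.debuglog(), which is switched on by the NODE_DEBUG environment variable rather than DEBUG. The sketch below uses it in place of the debug module only so that it runs without extra packages; the section name "myapp" and the handler are hypothetical.

```javascript
const util = require('node:util');

// Logs only when NODE_DEBUG includes "myapp", e.g. NODE_DEBUG=myapp node server.js
const debugLog = util.debuglog('myapp');

function handleSearch(query) {
  // Formatted like util.format(); written to stderr only when enabled.
  debugLog('search query: %j', query);
  return { results: [], query };
}
```

When NODE_DEBUG does not mention the section, the call is a cheap no-op, so debug statements can stay in the code.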

For app activity

If you're logging app activity (for example, tracking traffic or API calls), instead of using console.log(), use a logging library like Winston or Bunyan. For a detailed comparison of these two libraries, see the StrongLoop blog post Comparing Winston and Bunyan Node.js Logging.
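Winston and Bunyan have their own APIs, and the sketch below uses neither. It only illustrates the kind of output such libraries produce: one structured JSON record per line, written to any writable stream (stdout, a file stream, or a pipe to another program). The function name and fields are hypothetical.

```javascript
// A minimal structured logger: one JSON object per line, written to any
// writable stream. Not the Winston or Bunyan API; an illustration only.
function createLogger(stream, baseFields = {}) {
  const logAt = (level) => (message, fields = {}) => {
    const record = {
      time: new Date().toISOString(),
      level,
      message,
      ...baseFields,
      ...fields,
    };
    stream.write(`${JSON.stringify(record)}\n`);
  };
  return { info: logAt('info'), warn: logAt('warn'), error: logAt('error') };
}

// Usage: const log = createLogger(process.stdout, { service: 'api' });
//        log.info('request served', { path: '/users', ms: 12 });
```

Line-delimited JSON is easy for log shippers and search tools to parse, which is a large part of why structured loggers favor it.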

Handle exceptions properly

Node apps crash when they encounter an uncaught exception. Not handling exceptions and taking appropriate actions will make your Express app crash and go offline. If you follow the advice in Ensure your app automatically restarts below, then your app will recover from a crash. Fortunately, Express apps typically have a short startup time. Nevertheless, you want to avoid crashing in the first place, and to do that, you need to handle exceptions properly.

To ensure you handle all exceptions, use the following techniques:

- Use try-catch

- Use promises

Before diving into these topics, you should have a basic understanding of Node/Express error handling: using error-first callbacks, and propagating errors in middleware. Node uses an "error-first callback" convention for returning errors from asynchronous functions, where the first parameter to the callback function is the error object, followed by result data in succeeding parameters. To indicate no error, pass null as the first parameter. The callback function must correspondingly follow the error-first callback convention to meaningfully handle the error. And in Express, the best practice is to use the next() function to propagate errors through the middleware chain.
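Both conventions fit in a few lines. In this sketch, findUserName is a hypothetical asynchronous lookup that reports errors error-first, and loadUserName returns Express-style middleware that forwards any error to next() instead of throwing it. The in-memory users object stands in for a real data source.

```javascript
// Hypothetical async lookup that follows Node's error-first callback convention.
function findUserName(users, id, callback) {
  setImmediate(() => {
    if (!Object.prototype.hasOwnProperty.call(users, id)) {
      callback(new Error(`user ${id} not found`)); // error goes first
      return;
    }
    callback(null, users[id]); // null first argument means "no error"
  });
}

// Express-style middleware: pass errors to next() instead of throwing them.
function loadUserName(users) {
  return (req, res, next) => {
    findUserName(users, req.params.id, (err, name) => {
      if (err) return next(err); // skips ahead to error-handling middleware
      res.locals.userName = name;
      return next();
    });
  };
}
```

Throwing inside the callback would not work here: by the time it runs, the original call stack (and any try-catch around it) is gone, so next(err) is the only reliable way to hand the error to Express.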

For more on the fundamentals of error handling, see:

- Error Handling in Node.js

- Building Robust Node Applications: Error Handling (StrongLoop blog)

What not to do

One thing you should not do is to listen for the uncaughtException event, emitted when an exception bubbles all the way back to the event loop. Adding an event listener for uncaughtException will change the default behavior of the process that is encountering an exception; the process will continue to run despite the exception. This might sound like a good way of preventing your app from crashing, but continuing to run the app after an uncaught exception is a dangerous practice and is not recommended, because the state of the process becomes unreliable and unpredictable.

Additionally, using uncaughtException is officially recognized as crude. So listening for uncaughtException is just a bad idea. This is why we recommend things like multiple processes and supervisors: crashing and restarting is often the most reliable way to recover from an error.

We also don't recommend using domains. It generally doesn't solve the problem and is a deprecated module.

Use try-catch

Try-catch is a JavaScript language construct that you can use to catch exceptions in synchronous code. Use try-catch, for example, to handle JSON parsing errors as shown below.

Use a tool such as JSHint or JSLint to help you find implicit exceptions like reference errors on undefined variables.

Here is an example of using try-catch to handle a potential process-crashing exception. This middleware function accepts a query field parameter named "params" that is a JSON object.

```javascript
app.get('/search', (req, res) => {
  // Simulating async operation
  setImmediate(() => {
    const jsonStr = req.query.params
    try {
      const jsonObj = JSON.parse(jsonStr)
      res.send('Success')
    } catch (e) {
      res.status(400).send('Invalid JSON string')
    }
  })
})
```

However, try-catch works only for synchronous code. Because the Node platform is primarily asynchronous (particularly in a production environment), try-catch won't catch a lot of exceptions.

Use promises

Promises will handle any exceptions (both explicit and implicit) in asynchronous code blocks that use then(). Just add .catch(next) to the end of promise chains. For example:

```javascript
app.get('/', (req, res, next) => {
  // do some sync stuff
  queryDb()
    .then((data) => makeCsv(data)) // handle data
    .then((csv) => { /* handle csv */ })
    .catch(next)
})

app.use((err, req, res, next) => {
  // handle error
})
```

Now all errors, asynchronous and synchronous, get propagated to the error middleware.
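The mechanism can be checked without a running server by calling a handler of the same shape with stand-in req, res, and next objects. Here queryDb and makeCsv are hypothetical stand-ins, and makeCsv contains a deliberate implicit bug (calling a method that does not exist). Because the throw happens inside a then() callback, it becomes a rejected promise and lands in next.

```javascript
// Hypothetical stand-ins for the functions used in the handler above.
const queryDb = () => Promise.resolve([{ id: 1, name: 'Ada' }]);
const makeCsv = (rows) => {
  if (!Array.isArray(rows)) throw new TypeError('rows must be an array'); // explicit
  // Implicit bug: plain objects have no toCsvLine() method, so this throws a TypeError.
  return rows.map((row) => row.toCsvLine()).join('\n');
};

function csvHandler(req, res, next) {
  queryDb()
    .then((data) => makeCsv(data)) // a throw here turns into a rejected promise
    .then((csv) => res.send(csv))
    .catch(next); // explicit and implicit errors both end up in next(err)
}
```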

However, there are two caveats:

- All your asynchronous code must return promises (except emitters). If a particular library does not return promises, convert the base object by using a helper function like Bluebird.promisifyAll().

- Event emitters (like streams) can still cause uncaught exceptions. So make sure you are handling the error event properly; for example:

```javascript
const wrap = fn => (...args) => fn(...args).catch(args[2])

app.get('/', wrap(async (req, res, next) => {
  const company = await getCompanyById(req.query.id)
  const stream = getLogoStreamById(company.id)
  stream.on('error', next).pipe(res)
}))
```

For more information about error handling by using promises, see:

- Asynchronous Error Handling in Express with Promises, Generators and ES7

- Promises in Node.js with Q – An Alternative to Callbacks
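For the first caveat, when only a single callback-style function needs converting, Node's built-in util.promisify() is an alternative to a Bluebird helper. In this sketch legacyLookup is a hypothetical library function that follows the error-first convention; after promisification it fits into a then()/.catch(next) chain or an async function.

```javascript
const { promisify } = require('node:util');

// Hypothetical callback-style library function (error-first convention).
function legacyLookup(key, callback) {
  setImmediate(() => {
    if (key === 'missing') callback(new Error(`no value for ${key}`));
    else callback(null, `value for ${key}`);
  });
}

// promisify() converts one function; Bluebird's promisifyAll() converts
// all the methods of an object.
const lookup = promisify(legacyLookup);

// The promise-returning version now composes with await and .catch(next).
async function describeKey(key) {
  const value = await lookup(key);
  return value.toUpperCase();
}
```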

Things to do in your environment / setup

Here are some things you can do in your system environment to improve your app's performance:

- Set NODE_ENV to "production"

- Ensure your app automatically restarts

- Run your app in a cluster

- Cache request results

- Use a load balancer

- Use a reverse proxy

Set NODE_ENV to "production"

The NODE_ENV environment variable specifies the environment in which an application is running (usually, development or production). One of the simplest things you can do to improve performance is to set NODE_ENV to "production".

Setting NODE_ENV to "production" makes Express:

- Cache view templates.

- Cache CSS files generated from CSS extensions.

- Generate less verbose error messages.

Tests indicate that just doing this can improve app performance by a factor of three!

If you need to write environment-specific code, you can check the value of NODE_ENV with process.env.NODE_ENV. Be aware that checking the value of any environment variable incurs a performance penalty, and so should be done sparingly.
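One way to keep such checks sparing is to read NODE_ENV once at startup and reuse the result. The constant names and the errorResponse helper below are hypothetical; the fallback to "development" mirrors the default Express uses for its env setting when NODE_ENV is unset.

```javascript
// Read NODE_ENV once at startup instead of consulting process.env per request.
// Falling back to 'development' mirrors Express's default when NODE_ENV is unset.
const NODE_ENV = process.env.NODE_ENV || 'development';
const IS_PRODUCTION = NODE_ENV === 'production';

// Example of environment-specific behavior: terse error bodies in production.
function errorResponse(err) {
  return IS_PRODUCTION
    ? { message: 'Internal Server Error' }
    : { message: err.message, stack: err.stack };
}
```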

In development, you typically set environment variables in your interactive shell, for example by using export or your .bash_profile file. But in general you shouldn't do that on a production server; instead, use your OS's init system (systemd or Upstart). The next section provides more details about using your init system in general, but setting NODE_ENV is so important for performance (and easy to do) that it's highlighted here.

With Upstart, use the env keyword in your job file. For example:

```
# /etc/init/env.conf
env NODE_ENV=production
```

For more information, see the Upstart Intro, Cookbook and Best Practices.

With systemd, use the Environment directive in your unit file. For example:

```
# /etc/systemd/system/myservice.service
[Service]
Environment=NODE_ENV=production
```

For more information, see Using Environment Variables In systemd Units.

Ensure your app automatically restarts

In production, you don't want your application to be offline, ever. This means you need to make sure it restarts both if the app crashes and if the server itself crashes. Although you hope that neither of those events occurs, realistically you must account for both eventualities by:

- Using a process manager to restart the app (and Node) when it crashes.

- Using the init system provided by your OS to restart the process manager when the OS crashes. It's also possible to use the init system without a process manager.

Node applications crash if they encounter an uncaught exception. The foremost thing you need to do is to ensure your app is well-tested and handles all exceptions (see Handle exceptions properly for details). But as a fail-safe, put a mechanism in place to ensure that if and when your app crashes, it will automatically restart.

Use a process manager

In development, you started your app simply from the command line with node server.js or something similar. But doing this in production is a recipe for disaster. If the app crashes, it will be offline until you restart it. To ensure your app restarts if it crashes, use a process manager. A process manager is a "container" for applications that facilitates deployment, provides high availability, and enables you to manage the application at runtime.

In addition to restarting your app when it crashes, a process manager can enable you to:

- Gain insights into runtime performance and resource consumption.

- Modify settings dynamically to improve performance.

- Control clustering (StrongLoop PM and pm2).

The most popular process managers for Node are as follows:

- StrongLoop Process Manager

- PM2

- Forever

For a feature-by-feature comparison of the three process managers, see http://strong-pm.io/compare/. For a more detailed introduction to all three, see Process managers for Express apps.

Using any of these process managers will suffice to keep your application up, even if it does crash from time to time.
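To make the core idea concrete, here is a toy supervisor built on Node's child_process module. It is not how StrongLoop PM, PM2, or Forever are implemented and is no substitute for them; it only shows the basic loop they all provide: start the app, watch for an abnormal exit, and start it again (up to a limit). The function name and options are hypothetical.

```javascript
const { spawn } = require('node:child_process');

// A toy supervisor: runs `node <args>` and restarts it whenever it exits
// abnormally, giving up after maxRestarts. Real process managers add backoff,
// logging, clustering, and monitoring on top of this basic loop.
function supervise(args, { maxRestarts = 5, onGiveUp = () => {} } = {}) {
  let restarts = 0;

  function start() {
    const child = spawn(process.execPath, args, { stdio: 'inherit' });
    child.on('error', (err) => console.error('failed to start child:', err));
    child.on('exit', (code, signal) => {
      if (code === 0) return; // clean shutdown: nothing to do
      if (restarts >= maxRestarts) {
        onGiveUp(restarts);
        return;
      }
      restarts += 1;
      console.error(`child exited (code=${code}, signal=${signal}); restart #${restarts}`);
      start();
    });
  }

  start();
}

// Usage (hypothetical entry point): supervise(['server.js'], { maxRestarts: 10 });
```

Note that the supervisor itself is a single process that can also die, which is why the init-system layer described below is still needed.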

However, StrongLoop PM has lots of features that specifically target production deployment. You can use it and the related StrongLoop tools to:

- Build and package your app locally, then deploy it securely to your production system.

- Automatically restart your app if it crashes for any reason.

- Manage your clusters remotely.

- View CPU profiles and heap snapshots to optimize performance and diagnose memory leaks.

- View performance metrics for your application.

- Easily scale to multiple hosts with integrated control for Nginx load balancer.

As explained below, when you install StrongLoop PM as an operating system service using your init system, it will automatically restart when the system restarts. Thus, it will keep your application processes and clusters alive forever.

Use an init system

The next layer of reliability is to ensure that your app restarts when the server restarts. Systems can still go down for a variety of reasons. To ensure that your app restarts if the server crashes, use the init system built into your OS. The two main init systems in use today are systemd and Upstart.

There are two ways to use init systems with your Express app:

- Run your app in a process manager, and install the process manager as a service with the init system. The process manager will restart your app when the app crashes, and the init system will restart the process manager when the OS restarts. This is the recommended approach.

- Run your app (and Node) directly with the init system. This is somewhat simpler, but you don't get the additional advantages of using a process manager.

Systemd

Systemd is a Linux system and service manager. Most major Linux distributions have adopted systemd as their default init system.

A systemd service configuration file is called a unit file, with a filename ending in .service. Here's an example unit file to manage a Node app directly. Replace the values enclosed in <angle brackets> for your system and app:

```
[Unit]
Description=<Awesome Express App>

[Service]
Type=simple
ExecStart=/usr/local/bin/node </projects/myapp/index.js>
WorkingDirectory=</projects/myapp>

User=nobody
Group=nogroup

# Environment variables:
Environment=NODE_ENV=production

# Allow many incoming connections
LimitNOFILE=infinity

# Allow core dumps for debugging
LimitCORE=infinity

StandardInput=null
StandardOutput=syslog
StandardError=syslog
Restart=always

[Install]
WantedBy=multi-user.target
```

For more information on systemd, see the systemd reference (man page).

StrongLoop PM as a systemd service

You can easily install StrongLoop Process Manager as a systemd service. After you do, when the server restarts, it will automatically restart StrongLoop PM, which will then restart all the apps it is managing.

To install StrongLoop PM as a systemd service:

```
$ sudo sl-pm-install --systemd
```

Then start the service with:

```
$ sudo /usr/bin/systemctl start strong-pm
```

For more information, see Setting up a production host (StrongLoop documentation).

Upstart

Upstart is a system tool available on many Linux distributions for starting tasks and services during system startup, stopping them during shutdown, and supervising them. You can configure your Express app or process manager as a service and then Upstart will automatically restart it when it crashes.

An Upstart service is defined in a job configuration file (also called a "job") with filename ending in .conf. The following example shows how to create a job called "myapp" for an app named "myapp" with the main file located at /projects/myapp/index.js.

Create a file named myapp.conf at /etc/init/ with the following content (replace the user, group, app directory, and main file with values for your system and app):

```
# When to start the process
start on runlevel [2345]

# When to stop the process
stop on runlevel [016]

# Increase file descriptor limit to be able to handle more requests
limit nofile 50000 50000

# Use production mode
env NODE_ENV=production

# Run as www-data
setuid www-data
setgid www-data

# Run from inside the app dir
chdir /projects/myapp

# The process to start
exec /usr/local/bin/node /projects/myapp/index.js

# Restart the process if it is down
respawn

# Limit restart attempt to 10 times within 10 seconds
respawn limit 10 10
```

NOTE: This script requires Upstart 1.4 or newer, supported on Ubuntu 12.04-14.10.

Since the job is configured to run when the system starts, your app will be started along with the operating system, and automatically restarted if the app crashes or the system goes down.

Apart from automatically restarting the app, Upstart enables you to use these commands:

- start myapp: Start the app

- restart myapp: Restart the app

- stop myapp: Stop the app

For more information on Upstart, see Upstart Intro, Cookbook and Best Practices.

StrongLoop PM as an Upstart service

You can easily install StrongLoop Process Manager as an Upstart service. After you do, when the server restarts, it will automatically restart StrongLoop PM, which will then restart all the apps it is managing.

To install StrongLoop PM as an Upstart 1.4 service:

```
$ sudo sl-pm-install
```

Then run the service with:

```
$ sudo /sbin/initctl start strong-pm
```

NOTE: On systems that don't support Upstart 1.4, the commands are slightly different. See Setting up a production host (StrongLoop documentation) for more information.

Run your app in a cluster

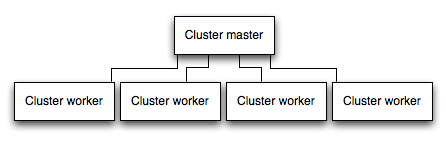

In a multi-core system, you can increase the performance of a Node app by many times by launching a cluster of processes. A cluster runs multiple instances of the app, ideally one instance on each CPU core, thereby distributing the load and tasks among the instances.

IMPORTANT: Since the app instances run as separate processes, they do not share the same memory space. That is, objects are local to each instance of the app. Therefore, you cannot maintain state in the application code. However, you can use an in-memory datastore like Redis to store session-related data and state. This caveat applies to essentially all forms of horizontal scaling, whether clustering with multiple processes or multiple physical servers.

In clustered apps, worker processes can crash individually without affecting the rest of the processes. Apart from performance advantages, failure isolation is another reason to run a cluster of app processes. Whenever a worker process crashes, always make sure to log the event and spawn a new process using cluster.fork().

Using Node's cluster module

Clustering is made possible with Node's cluster module. This enables a master process to spawn worker processes and distribute incoming connections among the workers. However, rather than using this module directly, it's far better to use one of the many tools out there that do it for you automatically; for example node-pm or cluster-service.

Using StrongLoop PM

If you deploy your application to StrongLoop Process Manager (PM), then you can take advantage of clustering without modifying your application code.

When StrongLoop Process Manager (PM) runs an application, it automatically runs it in a cluster with a number of workers equal to the number of CPU cores on the system. You can manually change the number of worker processes in the cluster using the slc command line tool without stopping the app.

For example, assuming you've deployed your app to prod.foo.com and StrongLoop PM is listening on port 8701 (the default), then to set the cluster size to eight using slc:

```
$ slc ctl -C http://prod.foo.com:8701 set-size my-app 8
```

For more information on clustering with StrongLoop PM, see Clustering in StrongLoop documentation.

Cache request results

Another strategy to improve the performance in production is to cache the result of requests, so that your app does not repeat the operation to serve the same request repeatedly.

Use a caching server like Varnish or Nginx (see also Nginx Caching) to greatly improve the speed and performance of your app.
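A dedicated caching server is the recommended route. For comparison, the same idea at the application level can be sketched as a small time-to-live cache in front of an expensive async operation. TtlCache and withCache are hypothetical names; the cache is per process, so in a cluster each worker would keep its own copy, which is one reason a shared cache server is preferable.

```javascript
// A minimal in-process cache with a time-to-live, keyed by e.g. the request URL.
class TtlCache {
  constructor(ttlMs, now = Date.now) {
    this.ttlMs = ttlMs;
    this.now = now; // injectable clock, handy for tests
    this.entries = new Map();
  }

  get(key) {
    const entry = this.entries.get(key);
    if (entry === undefined) return undefined;
    if (this.now() >= entry.expiresAt) {
      this.entries.delete(key); // expired: drop it and report a miss
      return undefined;
    }
    return entry.value;
  }

  set(key, value) {
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }
}

// Wrap an expensive async operation so repeated identical requests reuse the result.
function withCache(cache, keyOf, compute) {
  return async (...args) => {
    const key = keyOf(...args);
    const hit = cache.get(key);
    if (hit !== undefined) return hit;
    const value = await compute(...args);
    cache.set(key, value);
    return value;
  };
}
```

This sketch does not deduplicate concurrent misses: two simultaneous requests for the same uncached key will both run the computation.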

Use a load balancer

No matter how optimized an app is, a single instance can handle only a limited amount of load and traffic. One way to scale an app is to run multiple instances of it and distribute the traffic via a load balancer. Setting up a load balancer can improve your app's performance and speed, and enable it to scale more than is possible with a single instance.

A load balancer is usually a reverse proxy that orchestrates traffic to and from multiple application instances and servers. You can easily set up a load balancer for your app by using Nginx or HAProxy.

With load balancing, you might have to ensure that requests that are associated with a particular session ID connect to the process that originated them. This is known as session affinity, or sticky sessions, and may be addressed by the suggestion above to use a data store such as Redis for session data (depending on your application). For a discussion, see Using multiple nodes.
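The two routing strategies mentioned here can be sketched as small selection functions: plain round-robin for stateless requests, and hashing the session ID for sticky sessions. In practice Nginx or HAProxy makes these decisions; the function names and backend addresses below are hypothetical, and hashing is only one common way to get affinity.

```javascript
const crypto = require('node:crypto');

// Round-robin: hand out backends in turn.
function roundRobin(backends) {
  let index = 0;
  return () => {
    const backend = backends[index];
    index = (index + 1) % backends.length;
    return backend;
  };
}

// Sticky sessions: the same session ID always hashes to the same backend.
function stickyBackend(backends, sessionId) {
  const digest = crypto.createHash('sha256').update(sessionId).digest();
  return backends[digest.readUInt32BE(0) % backends.length];
}

// Usage with hypothetical instances:
// const pick = roundRobin(['10.0.0.1:3000', '10.0.0.2:3000']);
// pick(); // '10.0.0.1:3000', then '10.0.0.2:3000', then '10.0.0.1:3000', ...
```

With simple modulo hashing, adding or removing a backend remaps most sessions, which is another argument for keeping session data in a shared store such as Redis.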

Use a reverse proxy

A reverse proxy sits in front of a web app and performs supporting operations on the requests, apart from directing requests to the app. It can handle error pages, compression, caching, serving files, and load balancing among other things.

Handing over tasks that do not require knowledge of application state to a reverse proxy frees up Express to perform specialized application tasks. For this reason, it is recommended to run Express behind a reverse proxy like Nginx or HAProxy in production.
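In production this role belongs to Nginx or HAProxy. Purely to illustrate what "sitting in front of the app" means, here is a toy reverse proxy built on Node's http module. It forwards every request to a single upstream (the host and port are assumptions), appends the client address to an X-Forwarded-For header, and serves its own 502 page when the app is unreachable. It has no timeouts, TLS, caching, or load balancing, so treat it as a sketch, not something to deploy.

```javascript
const http = require('node:http');

// A toy reverse proxy: forwards each request to one upstream and streams the reply back.
function createReverseProxy(upstreamPort, upstreamHost = '127.0.0.1') {
  return http.createServer((clientReq, clientRes) => {
    const priorHops = clientReq.headers['x-forwarded-for'];
    const clientIp = clientReq.socket.remoteAddress || 'unknown';
    const headers = {
      ...clientReq.headers,
      'x-forwarded-for': priorHops ? `${priorHops}, ${clientIp}` : clientIp,
    };

    const upstreamReq = http.request(
      { host: upstreamHost, port: upstreamPort, method: clientReq.method, path: clientReq.url, headers },
      (upstreamRes) => {
        clientRes.writeHead(upstreamRes.statusCode, upstreamRes.headers);
        upstreamRes.pipe(clientRes);
      },
    );

    // A supporting operation handled by the proxy: an error page when the app is down.
    upstreamReq.on('error', () => {
      if (!clientRes.headersSent) clientRes.writeHead(502, { 'content-type': 'text/plain' });
      clientRes.end('Bad gateway\n');
    });

    clientReq.pipe(upstreamReq);
  });
}
```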

Source: https://expressjs.com/th/advanced/best-practice-performance.html
